New Central Computing System of KEK

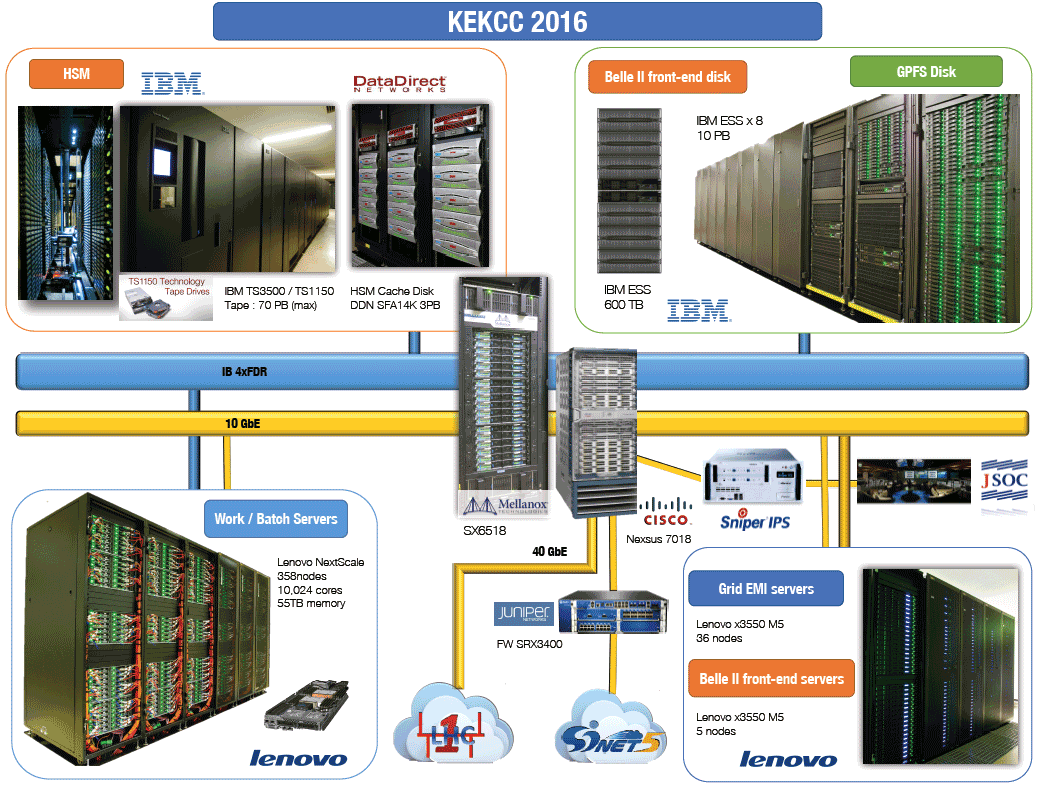

Computing technology has become essential to the success of big science projects. For ongoing large-scale high energy physics (HEP) experiments, computing is one of the key technologies, alongside accelerators and detectors. The KEK central computing system (KEKCC) plays a significant role in computing for the various experiments conducted at KEK, such as Belle / Belle II and the J-PARC experiments. The KEK Computing Research Center is responsible for the procurement and operation of the computing system. The system is entirely replaced every 4-5 years through a procurement process, and each time it is redesigned and built from scratch to meet the requirements of the experiment groups. FY2016 was the year in which we pushed hard to launch the new system. After eight months of installation work, the new KEKCC was unveiled in September 2016. It is a rental system under a four-year contract running until August 2020.

The KEKCC is a complex set of computing services. The core part consists of login and batch servers and a large-scale storage system. The storage system is connected to the computing nodes by a high-speed interconnect that delivers high I/O throughput. The computing resources (CPU and storage) have been substantially enhanced in response to the recent increase in computing demand. The new system has about 10,000 CPU cores, 13 PB of disk storage, and a tape library with a maximum capacity of 70 PB. CPU and disk storage resources were increased by factors of 2.5 and 1.8, respectively, relative to the previous system. Fig. 1 shows an overview of the system. The KEKCC also provides a set of Grid computing services shared among experiment groups (mainly for Belle II). Grid computing helps distribute data across geographically dispersed sites and share it efficiently within a worldwide collaboration.
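As a rough illustration of the upgrade figures quoted above, the short sketch below back-calculates the approximate size of the previous system. It assumes that "2.5 times" and "1.8 times" are multiplicative factors relative to the previous system, and it treats the rounded figures of about 10,000 cores and 13 PB as exact inputs, so the results are only indicative. The variable names are illustrative.

```python
# Figures quoted in the text for the new system (rounded values).
new_cpu_cores = 10_000
new_disk_pb = 13.0

# Assumption: the quoted upgrades are multiplicative factors
# relative to the previous system.
cpu_factor = 2.5
disk_factor = 1.8

# Implied size of the previous system under that assumption.
prev_cpu_cores = new_cpu_cores / cpu_factor
prev_disk_pb = new_disk_pb / disk_factor

print(f"Implied previous CPU cores: about {prev_cpu_cores:.0f}")
print(f"Implied previous disk storage: about {prev_disk_pb:.1f} PB")
```

Under these assumptions, the previous system would have had roughly 4,000 CPU cores and about 7 PB of disk storage.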

Data centers for large-scale experiments must give serious consideration to managing huge amounts of data. At KEK, the Belle II experiment requires several hundred PB of data to be stored at the KEK site, even if Grid computing technology is fully adopted as a distributed analysis model. The challenge is not only storage capacity: I/O scalability, usability, power efficiency, and other factors must also be considered in managing the computing system. KEK is the host institute of the Belle II experiment and of various J-PARC experiments. We are responsible not only for data storage but also for data processing. An efficient data processing cycle is crucial for data analysis in the experiments. We must support the whole chain, from raw data taking to DST (Data Summary Table) production and the storage of physics data used in end-user analysis.

The storage system is composed of two types of systems: a high-performance disk storage system and a tape library system with a capacity of up to 70 PB. The tape system allows storage capacity to be extended far beyond that of disk storage. In terms of cost efficiency and long-term data preservation, tape technology is still essential in HEP experiments. One disadvantage of a tape system is that accessing data is not as convenient as with a disk system. Hierarchical storage management (HSM) technology helps hide this drawback and enables seamless data I/O. We adopted HPSS (High Performance Storage System) as the HSM for accessing tape data. Data I/O is handled automatically through the disk of the HSM system, so that files on tape can be accessed in the same way as files on disk.
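The idea of hierarchical storage management described above can be illustrated with a toy model. The sketch below is not HPSS and does not use any HPSS interface. The `ToyHSM` class, its method names, and the least-recently-used eviction policy are all assumptions made for illustration. It shows only the general pattern: a small disk tier sits in front of a large tape tier, and a read of a tape-only file triggers an automatic recall to disk, so the caller uses the same call in both cases.

```python
from collections import OrderedDict


class ToyHSM:
    """Toy two-tier store: a small disk cache in front of a large tape tier.

    Hypothetical illustration only; not the HPSS API.
    """

    def __init__(self, disk_capacity):
        self.disk_capacity = disk_capacity  # max number of files kept on disk
        self.disk = OrderedDict()           # path -> data, ordered by recency
        self.tape = {}                      # path -> data, the permanent copy
        self.recalls = 0                    # number of tape-to-disk recalls

    def write(self, path, data):
        # New data lands on disk and is also migrated to tape.
        self.tape[path] = data
        self._put_on_disk(path, data)

    def read(self, path):
        # The caller uses the same call whether the file is on disk
        # or only on tape.
        if path in self.disk:
            self.disk.move_to_end(path)     # mark as recently used
            return self.disk[path]
        data = self.tape[path]              # raises KeyError if never written
        self.recalls += 1
        self._put_on_disk(path, data)       # stage the file back to disk
        return data

    def _put_on_disk(self, path, data):
        self.disk[path] = data
        self.disk.move_to_end(path)
        # Evict least recently used files; their tape copies remain.
        while len(self.disk) > self.disk_capacity:
            self.disk.popitem(last=False)


hsm = ToyHSM(disk_capacity=2)
hsm.write("run1.dat", b"a")
hsm.write("run2.dat", b"b")
hsm.write("run3.dat", b"c")      # run1.dat is evicted from disk
data = hsm.read("run1.dat")      # recalled from tape transparently
```

A real HSM decides when to migrate and purge files with far more elaborate policies, but the user-facing effect is the same as in this sketch: the file path stays valid even after the disk copy has been released.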

In the batch system as well, we make continuous efforts to improve job throughput, monitor jobs, and optimize queue parameters. As a result, CPU utilization has gradually risen, and the system has remained heavily loaded.

The KEKCC is an essential component for the various experiments at KEK. In particular, the upcoming Belle II experiment is our primary target. The KEK CRC will continue its efforts to support Belle II computing. We hope that the new system will contribute to the success of the Belle II experiment.